TL;DR: We propose a game-theoretic attention mechanism for robots: a neural network, trained through a differentiable game solver, that keeps only the relevant agents and drops the rest.

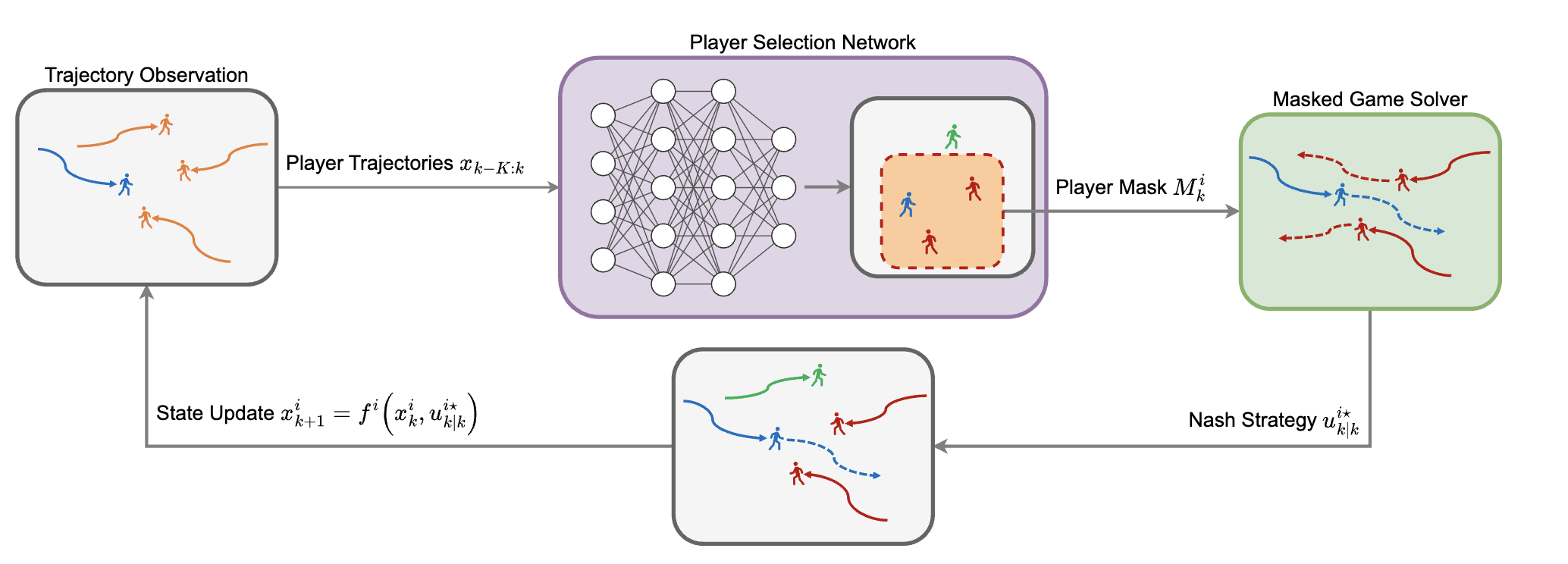

Abstract: Game-theoretic planning frameworks excel at modeling multi-agent interactions but scale poorly — solving games with hundreds of players is slow and often impractical for real-time use. We introduce PSN Game, a novel framework that learns to ignore irrelevant agents by training a Player Selection Network (PSN). The PSN outputs a mask that selects only the most influential agents, allowing the ego player to solve a smaller, masked game.
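The selection step can be illustrated with a minimal sketch. It scores each other agent from its past observed trajectory, thresholds the scores into a binary mask, and returns the reduced player list that the ego would pass to a game solver. The scoring function here is a hypothetical stand-in: a single logistic unit with made-up weights over two hand-picked features (distance and closing speed). The real PSN is a neural network trained end-to-end that learns directly from trajectories without hand-picked features. The solver itself is out of scope.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Agent:
    agent_id: int
    # Past observed (x, y) positions, oldest first; at least two are needed.
    past_positions: list = field(default_factory=list)


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def features(ego, other):
    # Hypothetical hand-picked features computed only from past observations:
    # current distance to the ego and closing speed over the last time step.
    (ex0, ey0), (ex1, ey1) = ego.past_positions[-2:]
    (ox0, oy0), (ox1, oy1) = other.past_positions[-2:]
    d_prev = math.hypot(ox0 - ex0, oy0 - ey0)
    d_now = math.hypot(ox1 - ex1, oy1 - ey1)
    return (d_now, d_prev - d_now)  # closing speed > 0 means approaching


def psn_score(feat, weights=(-1.0, 2.0), bias=3.0):
    # Stand-in for the learned PSN: one logistic unit with hypothetical fixed
    # weights. The actual PSN is a neural network trained end-to-end.
    z = bias + sum(w * f for w, f in zip(weights, feat))
    return sigmoid(z)


def select_players(ego, others, threshold=0.5):
    # Binary mask over the other agents; the ego player is always kept.
    mask = [psn_score(features(ego, o)) >= threshold for o in others]
    players = [ego.agent_id] + [o.agent_id for o, keep in zip(others, mask) if keep]
    return mask, players


ego = Agent(0, [(0.0, 0.0), (1.0, 0.0)])
others = [
    Agent(1, [(3.0, 0.0), (2.5, 0.0)]),    # close and approaching
    Agent(2, [(50.0, 0.0), (51.0, 0.0)]),  # far away
    Agent(3, [(10.0, 0.0), (11.0, 0.0)]),  # moderately far, not approaching
]
mask, players = select_players(ego, others)
print(mask)     # [True, False, False]
print(players)  # [0, 1]
```

Only agent 1 survives the mask, so the masked game has two players instead of four. A hard threshold is used here for readability. During training, a differentiable relaxation of the mask would be needed for gradients to flow from the game solver back into the network.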

This approach slashes runtime by reducing the dimensionality of the optimization problem — no hand-tuned heuristics or full game state required. The PSN operates purely on past observed trajectories and is trained end-to-end using a differentiable game solver.
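Masking reduces dimensionality for a simple reason, shown here with a back-of-the-envelope count. It assumes a common trajectory-game formulation in which the ego's solver optimizes every included player's state and control trajectory. The state and control dimensions and the horizon are hypothetical. The cubic factor applies only to dense factorizations. Practical game solvers exploit sparsity, so real speedups (including the reported 10x) depend on the solver and are not derived from this count.

```python
def num_decision_variables(num_players, state_dim, control_dim, horizon):
    # Each player contributes a state and a control vector at every time step.
    # Constraint multipliers are ignored, so this is a lower bound on unknowns.
    return num_players * horizon * (state_dim + control_dim)


# Hypothetical setup: 4-D state (x, y, heading, speed), 2-D control, 20 steps.
full = num_decision_variables(num_players=100, state_dim=4, control_dim=2, horizon=20)
masked = num_decision_variables(num_players=10, state_dim=4, control_dim=2, horizon=20)

print(full, masked)          # 12000 1200
print(full / masked)         # 10.0
# A dense linear solve (e.g., LU) costs O(n^3), so if the Newton-type step
# were dense, the masked problem's step would be roughly this much cheaper:
print((full / masked) ** 3)  # 1000.0
```

The count grows linearly in the number of players, so dropping 90 of 100 players shrinks the problem tenfold. The per-iteration cost typically grows faster than linearly in problem size.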

We show that PSNs:

- Outperform baselines in trajectory quality (length and smoothness)
- Maintain safety
- Cut runtime by 10x
- Generalize to real-world data without fine-tuning

By dropping irrelevant players, PSNs act as a game-theoretic attention mechanism — making large-scale multi-agent planning fast, robust, and scalable.

Links: paper